In 2007, a group of friends in Silicon Valley launched a start-up for hosting computer code on a website where it could be shared openly, with minimally restrictive use policies. The site, GitHub, functions like a social network for coders, where pictures of last night’s dinner are replaced with lines of code from ongoing or newly created projects. As the name suggests, the site not only functions as a hub for code repositories but also leverages git, an open source version control system developed by Linus Torvalds, the creator of one of the most successful open source ventures of all time: the Linux operating system.

In 2010, an Australian economist launched Kaggle, a start-up with the goal of solving wide-ranging data analytics and machine learning problems, from predicting HIV progression and identifying nerves in ultrasounds to speech recognition and passenger screening algorithms. Kaggle hosts open competitions on the web, offering prizes to the person or team that develops the best solution to a stated problem given an open dataset.

GitHub became the natural home for the code written for Kaggle contests, and is now home to thousands of repositories containing code from past competition winners and participants.

In 2012, Netflix published an article on Medium outlining the rationale behind its stated preference for using and developing open source software as it transitioned to a cloud-based system. The author lists many of the values inherent in the success of GitHub and Kaggle, principal among them the need for consistent, updated documentation and quality control. In the years that have followed, Netflix-OSS has made significant contributions to a myriad of open source tools, including many in daily use at Dewberry, such as Jupyter, Atom, and Papermill.

In 2017, Google acquired Kaggle, and Microsoft followed suit in 2018, acquiring GitHub for $7.5 billion. Today, Google and Microsoft are the top contributors to open source projects on GitHub. Google, Microsoft, AWS, Netflix, and many other tech firms have developed tools and workflows to manage truly big data, with libraries for distributed and geospatial computing. Through open sourcing, these tools are available now, out of the box, built on top of proven frameworks managed by organizations such as Apache, OSGeo, and NumFOCUS.

Constraints of the Desktop PC

For the past two decades, flood risk analyses have been performed on a desktop computer with a reasonable latency for processing and computation. The size and scale of the datasets were well balanced with computing power and software used for analysis.

As a result, flood studies have been executed at a steady pace, and communities have received updated risk profiles along coasts and inland waterways across the country.

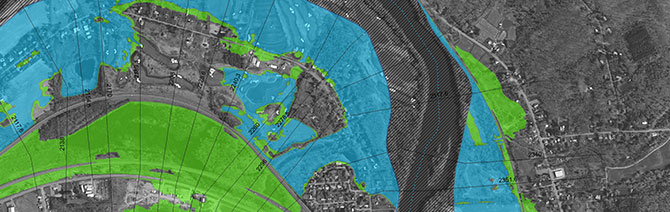

An example of a flood map that we created to help show the risk profiles along waterways for a community.

Recently, we’ve seen expansive growth in the size and complexity of required datasets, with improvements in temporal/spatial resolution, coverage, and availability. Our ability to interact with larger, more complex datasets hasn’t kept pace, in part because of the limitations of the proprietary GIS tools we use. These tools, developed to work exclusively on Windows operating systems and optimized for the constraints of a desktop PC, do not scale well to the modern cloud computing environment.

As a result, we’re constrained to the limited memory and high latency of ‘local’ computers, as data transfer across servers slows processing down.

At Dewberry, we are determined not to be limited in the size, quality, or scale of the analysis we can provide. By leveraging cloud computing resources, we’re able to perform continental-scale computations and analysis in minutes or hours. Open source software, combined with open standards and open data, allows us to do this.

The Move to Open Source

Open source innovations in GIS and cloud computing have changed the landscape. The tools available for analysis are no longer limited to those of a single provider. Proprietary and desktop tools will always have a place on the workbench, but will now share the space with open source tools and cloud infrastructure.

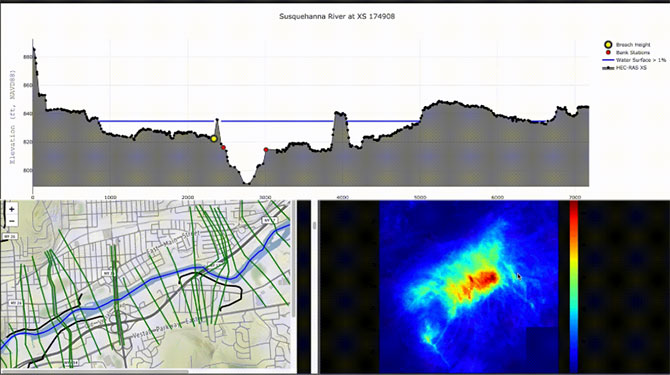

Screenshot from a Dewberry presentation at JupyterCon that shows an example of custom tools developed for a study in New York using Jupyter Lab.

Our federal partners are increasingly relying upon open source software solutions. With the release of HEC-RAS 5, its flagship hydraulic modeling software, the U.S. Army Corps of Engineers (USACE) removed support for proprietary GIS tools and incorporated the open source GDAL suite to perform geospatial functions natively. The Federal Emergency Management Agency has been moving in this direction as well, announcing in 2018 that Hazus will be transitioned to an open source platform. The National Oceanic and Atmospheric Administration, U.S. Geological Survey, and USACE have many projects underway on GitHub.

Opportunities to improve the state of the tools for flood risk analysis are everywhere. For many years at Dewberry, we’ve been building our workflows with tools developed from open source libraries. We’re passionate about exploring ways to improve the services we offer, and are striving to make use of, and contribute to, the many exciting tools in development by the open source community.